Ai Drawing Generator Free Online

Revolutionizing Drawing Generation with AI

Introducing Ai Drawing Generator

Key Features

ControlNet, as proposed by Lvmin Zhang and Maneesh Agrawala in "Adding Conditional Control to Text-to-Image Diffusion Models," introduces a neural network structure designed to enhance pretrained large diffusion models by incorporating additional input conditions.

Experimentation and Compatibility:

In practice, use the ControlNet checkpoint with Stable Diffusion v1-5, the version it was trained on. The checkpoint is also experimentally compatible with other diffusion models, such as DreamBooth fine-tuned variants of Stable Diffusion, so you can choose a base model that fits your needs.
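As a rough illustration of pairing the checkpoint with a base model, here is a minimal sketch that assumes the Hugging Face diffusers library. The repository IDs and the helper names `resolve_model_ids` and `load_scribble_pipeline` are assumptions made for this example; this page does not name them, and the hosted tool may be built differently.

```python
# Sketch only: both repository IDs are assumptions, not taken from this page.
CONTROLNET_ID = "lllyasviel/sd-controlnet-scribble"  # assumed scribble ControlNet checkpoint
DEFAULT_BASE_ID = "runwayml/stable-diffusion-v1-5"   # assumed Stable Diffusion v1-5 repository


def resolve_model_ids(base_model_id=None):
    """Return (base, controlnet) IDs, defaulting to Stable Diffusion v1-5."""
    return (base_model_id or DEFAULT_BASE_ID, CONTROLNET_ID)


def load_scribble_pipeline(base_model_id=None):
    """Attach the ControlNet checkpoint to a base diffusion model.

    diffusers is imported lazily so this module can be imported
    without the library installed.
    """
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    base_id, controlnet_id = resolve_model_ids(base_model_id)
    controlnet = ControlNetModel.from_pretrained(controlnet_id)
    return StableDiffusionControlNetPipeline.from_pretrained(
        base_id, controlnet=controlnet
    )
```

Calling `load_scribble_pipeline()` downloads the model weights on first use. Passing a different `base_model_id`, for example a DreamBooth fine-tuned model, swaps the base model while keeping the same ControlNet checkpoint; as noted above, that combination is experimental.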

Image Processing and External Dependencies:

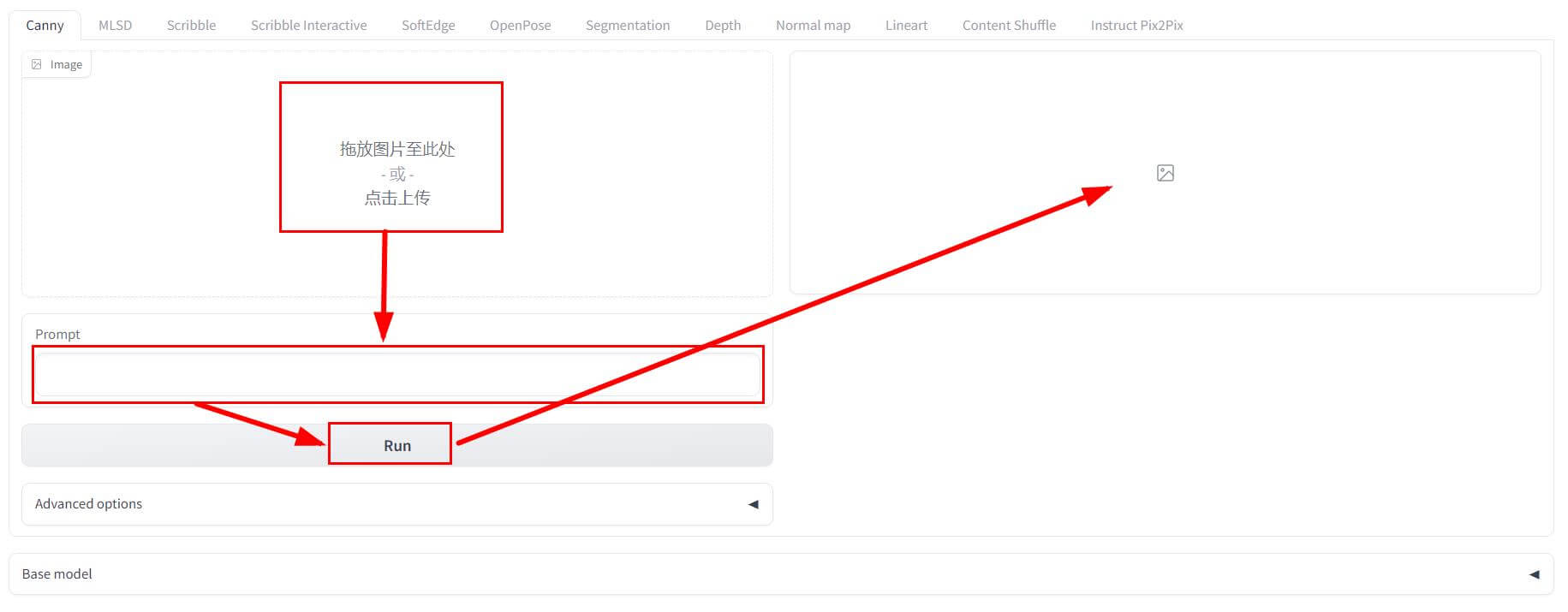

How to Use Ai Drawing Generator

To convert scribbled drawings to images using Ai Drawing Generator, follow these simple steps:

- Step 1: Upload scribbled drawings - Select and upload the scribbled drawings to be converted to images. Make sure the drawings are in a supported format and meet any size requirements.

- Step 2: Write a description - Write a brief description of the image you want to generate from your scribbled drawing, such as the color of the background. The more detailed the description, the better.

- Step 3: Wait for images to be generated - Once the scribbled drawings are uploaded, the model will process them to generate images. This may take some time, depending on the complexity of your scribbled drawings.

- Step 4: Download the images - Once the images have been generated, you are ready to download them. Check the quality and make adjustments or regenerate the image if necessary.
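The checks in Steps 1 and 2 can be sketched in a few lines of Python. This is only an illustration: the supported formats, the size limit, and the helper names `check_upload` and `build_prompt` are hypothetical, since this page does not state the actual requirements.

```python
from pathlib import Path

# Hypothetical limits: the page does not state the supported formats or size cap.
SUPPORTED_EXTENSIONS = {".png", ".jpg", ".jpeg"}
MAX_FILE_BYTES = 5 * 1024 * 1024


def check_upload(filename, size_bytes):
    """Return a list of problems with an upload; an empty list means it passes."""
    problems = []
    ext = Path(filename).suffix.lower()
    if ext not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format: {ext or 'none'}")
    if size_bytes > MAX_FILE_BYTES:
        problems.append(f"file too large: {size_bytes} bytes")
    return problems


def build_prompt(subject, details=()):
    """Join a subject and optional details (background color, style) into one prompt."""
    parts = [subject.strip()] + [d.strip() for d in details if d.strip()]
    return ", ".join(parts)
```

For example, `check_upload("cat.png", 1024)` returns an empty list, and `build_prompt("a cat on a sofa", ["light blue background"])` returns `"a cat on a sofa, light blue background"`.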

Note: Ai Drawing Generator generates high-quality images from scribbled drawings, but it is in a research preview stage and is primarily intended for educational or creative purposes. Please make sure that your use is reasonable and lawful.